GIH Blog and News

Reimagining Rural Health and Well-being

To inform positive change, Grantmakers In Health (GIH) and the National Rural Health Association (NRHA) are partnering to reimagine a unified vision for health and well-being in rural America. The Georgia Health Policy Center (GHPC) was engaged to conduct a landscape analysis and facilitate listening sessions with rural health stakeholders at the local, state, and national levels.

Philanthropy @ Work – Transitions – January 2026

The latest on transitions from the field.

GIH Bulletin

GIH Bulletin: October 2025

If research evidence falls in the vast forest of journals and grant reports, but no one outside hears it, can it inform policy? In today’s political climate, grantmakers must do more than generate knowledge. They must ensure that its real-world impact is visible, measurable, and defensible.

Reports and Surveys

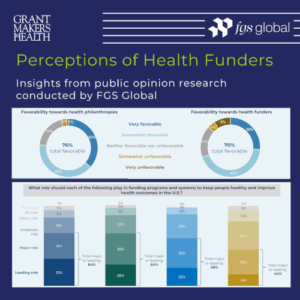

Survey Findings: Perceptions of Health Funders

In July and August 2025, Grantmakers In Health (GIH) conducted research to help health funders better understand how they are viewed by the public. The research included an online survey of engaged voters nationwide and an online focus group with Washington, DC, policy professionals.

An overview of the survey and online focus group findings is now available to all GIH Funding Partners. In addition, this overview was presented on Thursday, November 20, 2025, at the 2025 GIH Health Policy Exchange in Arlington, VA.

GIH Health Policy Update Newsletter

An Exclusive Resource for Funding Partners

The Health Policy Update is a newsletter produced in collaboration with Leavitt Partners and Trust for America’s Health. Drawing on GIH’s policy priorities outlined in our policy agenda and our strategic objective of increasing our policy and advocacy presence, the Health Policy Update provides GIH Funding Partners with a range of federal health policy news.